Healthcare today stands at the crossroads of rapid technological advancement and a deep responsibility to uphold patient safety. Artificial intelligence (AI) is reshaping the future of medicine, enhancing workflows, supporting diagnoses, and personalising treatment plans.

However, its integration into clinical environments must be carefully structured to avoid unintended consequences.

The tension between innovation and safety is not just regulatory; it’s operational, ethical, and human-centred. Recent studies and frameworks, such as SALIENT, highlight the importance of transparency, staged evaluation, and governance to ensure AI solutions are safe, effective, and trusted.

The Implementation Gap: Why Clinical AI Adoption Still Lags

Despite the buzz around AI in healthcare, widespread clinical deployment remains rare. In 2020 alone, over 15,000 AI-related publications emerged, but just 45 AI systems were deployed into clinical settings over an entire decade.

Source: Esmaeilzadeh, Pouyan. “Challenges and Strategies for Wide-Scale Artificial Intelligence (AI) Deployment in Healthcare Practices: A Perspective for Healthcare Organizations.” Artificial Intelligence in Medicine 151 (2024): 102861

This staggering gap reveals a key problem: a lack of structured, scalable pathways for evaluating and implementing AI tools.

Introducing SALIENT: A Framework for Safe AI Integration

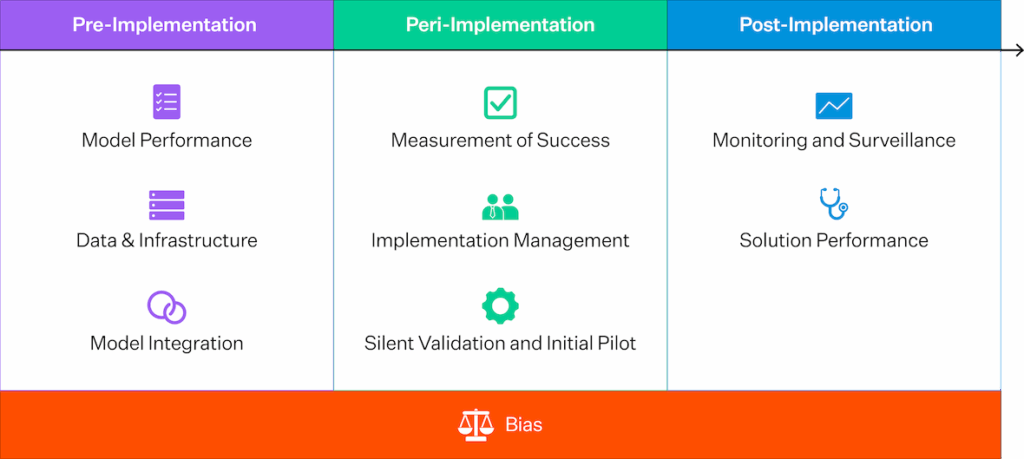

One promising approach is the SALIENT Framework, developed to guide the end-to-end implementation of clinical AI. It outlines five incremental stages from early definition to routine use, each designed to assess AI models for technical and clinical suitability.

Source: van der Vegt, Anton H et al. “Implementation Frameworks for End-to-End Clinical AI: Derivation of the SALIENT Framework.” Journal of the American Medical Informatics Association: JAMIA 30.9 (2023): 1503–1515

The SALIENT framework was developed by integrating relevant standards into each implementation stage, alongside insights from 20 international frameworks. According to van der Vegt et al. (2023), it is the only clinical AI implementation framework offering full coverage of all reporting guidelines, providing a comprehensive starting point to ensure AI systems are thoroughly tested and fit for purpose.

The framework goes beyond model accuracy, incorporating elements such as:

- Human-computer interaction

- Clinical workflow alignment

- Infrastructure readiness

- Governance and oversight

This methodical process ensures that AI is not only technically sound but also usable and safe within real-world healthcare environments.
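As a rough illustration of what a staged gate could look like in practice, the sketch below holds an AI tool at its current stage until every element listed above has been signed off. It is a minimal sketch, not the SALIENT specification: the check names, the `StageReview` class, and the `next_stage` function are hypothetical, and the stages are simply numbered 1 to 5 rather than given the framework's own labels.

```python
from dataclasses import dataclass, field

# Hypothetical checklist: model accuracy plus the four elements listed above.
CHECKS = (
    "model_accuracy",
    "human_computer_interaction",
    "clinical_workflow_alignment",
    "infrastructure_readiness",
    "governance_and_oversight",
)

NUM_STAGES = 5  # the article describes five incremental stages


@dataclass
class StageReview:
    """Sign-off record for one stage (assumed structure, for illustration only)."""
    stage: int
    passed: dict = field(default_factory=dict)

    def missing(self):
        # Any check that is absent or False still needs sign-off.
        return [c for c in CHECKS if not self.passed.get(c, False)]


def next_stage(review):
    """Return the stage the tool may occupy after this review."""
    if review.missing():
        return review.stage  # hold at the current stage
    return min(review.stage + 1, NUM_STAGES)


review = StageReview(stage=2, passed={c: True for c in CHECKS})
print(next_stage(review))  # advances because every check passed

review.passed["governance_and_oversight"] = False
print(next_stage(review), review.missing())  # held back, with the reason
```

The point of the design is that a strong accuracy score alone never moves a tool forward; a single unmet check, such as governance, is enough to hold it in place.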

Building Trust Through Transparency and Governance

The need for transparent governance grows as AI systems become more agentic, adapting dynamically and collaborating with other tools. Agentic AI can bring significant benefits, such as reduced clinician burden and enhanced care quality, but only when clinicians trust the systems.

One emerging concept is “governing agents”: AI systems that monitor other AI systems and enforce their rules of operation. In high-risk areas like ICU discharge planning, these agents keep the AI within defined boundaries and keep humans in the loop, while still allowing the AI to work effectively.
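To make the idea more concrete, here is a minimal sketch of a governing check, assuming a simple rule set. Everything in it is hypothetical: the `Proposal` type, the allowed actions, and the 0.9 confidence threshold are illustrative placeholders, not drawn from any real system or from the sources cited here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    """An action suggested by a clinical AI agent (hypothetical shape)."""
    action: str
    confidence: float


# Assumed boundaries for an ICU discharge-planning assistant.
ALLOWED_ACTIONS = {"suggest_discharge", "flag_for_review"}
MIN_CONFIDENCE = 0.9  # illustrative threshold, not a clinical recommendation


def govern(proposal):
    """Decide how a proposal is routed; nothing is acted on without a clinician."""
    if proposal.action not in ALLOWED_ACTIONS:
        return "blocked"  # outside the defined boundaries
    if proposal.confidence < MIN_CONFIDENCE:
        return "escalate_to_clinician"  # allowed, but needs closer review
    return "forward_to_clinician"  # allowed and confident, still human-approved


print(govern(Proposal("suggest_discharge", 0.95)))
print(govern(Proposal("suggest_discharge", 0.60)))
print(govern(Proposal("prescribe_medication", 0.99)))
```

Note that no branch lets the AI act on its own: even a high-confidence, in-bounds suggestion is forwarded to a clinician, which is one way to read “keeping humans in the loop”.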

Rethinking Regulation for Evolving AI

Emerging policies, such as proposed U.S. legislation that would allow AI to prescribe medication, have sparked debate. Critics argue that existing regulations, designed for static algorithms, aren’t fit for purpose when it comes to autonomous learning systems.

Source: Wang & Beecy, 2024 (BMJ Evidence-Based Medicine)

To protect patients without stifling innovation, regulatory frameworks must evolve alongside technology. This means moving away from rigid rule sets toward dynamic, evidence-based governance models that can adapt to future risks and opportunities.

A Practical Lens: Viewing AI as ‘Normal’ Technology

Rather than seeing AI as a futuristic superintelligence, we should treat it like any other critical infrastructure, comparable to electricity or the internet. This demystifies AI, shifting the focus from hype to pragmatic, institutional management.

By viewing AI through a lens of normalisation, healthcare organisations can integrate it more smoothly into existing processes, cultures, and accountability systems.

Moving Forward: How to Safely Scale AI in Healthcare

Innovation and safety are not mutually exclusive. With the right frameworks, healthcare can harness AI’s potential while protecting patients and clinicians. Success depends on:

- Thoughtfully staged implementation

- Human-centred design

- Continuous validation

- Transparent, adaptive governance

These principles offer a clear and credible path forward, ensuring that AI tools enhance, rather than disrupt, the care experience.

Ready to Navigate the Future of AI in Healthcare?

Orion Health is committed to advancing safe and scalable AI in clinical environments. Discover how our platform supports human-centred innovation through integrated data, modular solutions, and embedded governance.

References

Abràmoff, Michael D., and Tinglong Dai. “Incorporating Artificial Intelligence into Healthcare Workflows: Models and Insights.” Tutorials in Operations Research. Baltimore: Johns Hopkins University, 2023. https://doi.org/10.1287/educ.2023.0257.

Bajwa, Junaid, Usman Munir, Aditya Nori, and Bryan Williams. “Artificial Intelligence in Healthcare: Transforming the Practice of Medicine.” Future Healthcare Journal 8, no. 2 (2021): e188–e194. https://doi.org/10.7861/fhj.2021-0095.

Fox, Andrea. “EHRs and Agentic AI: Balancing Human and Automated Collaboration.” Healthcare IT News, March 18, 2025. https://www.healthcareitnews.com/news/ehrs-and-agentic-ai-balancing-human-and-automated-collaboration.

MacQueen, Alyx. “The Role of Agentic AI in Healthcare: Making a Difference in Workflows and Decision-Making.” Diginomica, March 18, 2025. https://diginomica.com/role-agentic-ai-healthcare-making-difference-workflows-and-decision-making.

Narayanan, Arvind, and Sayash Kapoor. AI as Normal Technology: An Alternative to the Vision of AI as a Potential Superintelligence. New York: Knight First Amendment Institute at Columbia University, April 15, 2025. https://knightcolumbia.org/content/ai-as-normal-technology.

van der Vegt, Anton H., Ian A. Scott, Krishna Dermawan, Rudolf J. Schnetler, Vikrant R. Kalke, and Paul J. Lane. “Implementation Frameworks for End-to-End Clinical AI: Derivation of the SALIENT Framework.” Journal of the American Medical Informatics Association 30, no. 9 (2023): 1503–1515. https://doi.org/10.1093/jamia/ocad088.