What Does ‘Equitable AI’ Really Mean?

AI has brought a surge of optimism to healthcare, enhancing diagnostics, tailoring treatments, and unlocking new efficiencies. But amid this progress, one critical element remains conspicuously absent: accountability.

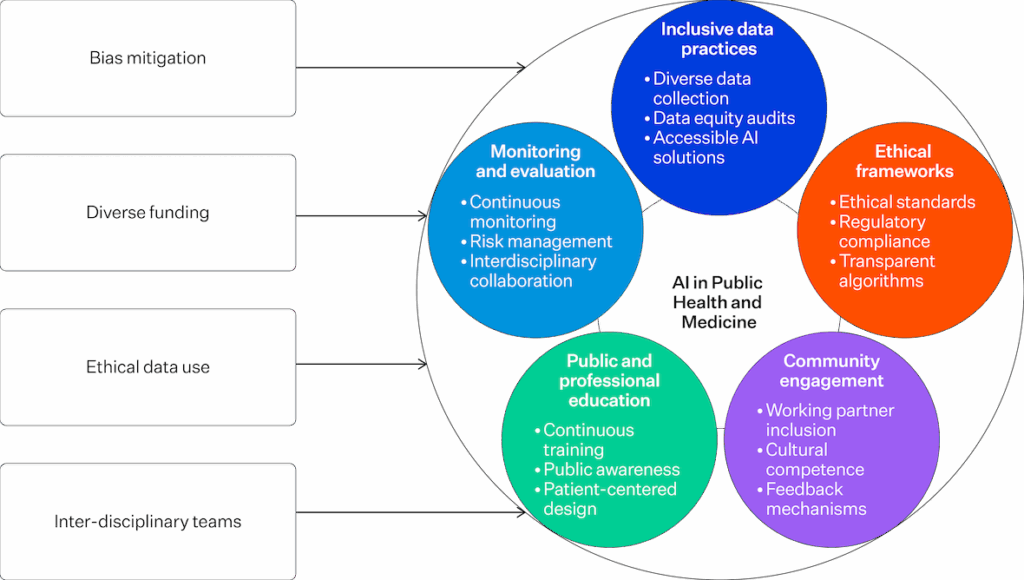

While AI holds vast potential to improve health outcomes and address long-standing inequities, it also risks deepening disparities, especially for marginalised communities. This risk arises when structural biases in data, design, and deployment go unacknowledged and unaddressed. If equity is the goal, then accountability must be its scaffolding.

Beyond Buzzwords: Defining Equitable AI

The phrase ‘equitable AI’ is now common in policy documents, marketing decks, and summit panels, but what does it actually mean?

According to the National Academy of Medicine, equitable AI must demonstrate, not just intend, fairness in its design, deployment, and access. Yet, in practice, these standards are often more aspirational than actionable.

Too often, equity is treated as a downstream metric, something to be measured after a model is built, rather than an upstream design imperative guiding how systems are conceived in the first place.

The Problem: Data Skew and Governance Gaps

Most AI models are trained on datasets drawn from high-income, English-speaking populations. This lack of representation leads to tools that underperform or fail when applied to diverse communities. Despite claims of neutrality, many systems amplify existing biases.
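As a minimal sketch of how such skew might be surfaced, the Python snippet below compares each group's share of a training dataset with its share of the population the tool is meant to serve. The group labels, counts, reference shares, and the 5-percentage-point tolerance are all hypothetical values chosen for illustration; they are not figures from any real dataset or published standard.

```python
def representation_gaps(dataset_counts, population_shares):
    """Return {group: dataset share minus population share}."""
    total = sum(dataset_counts.values())
    if total == 0:
        raise ValueError("dataset_counts is empty")
    return {
        group: dataset_counts.get(group, 0) / total - pop_share
        for group, pop_share in population_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """List groups whose dataset share falls short by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical counts and reference shares, for illustration only.
toy_counts = {"group_a": 800, "group_b": 150, "group_c": 50}
toy_population = {"group_a": 0.60, "group_b": 0.25, "group_c": 0.15}

toy_gaps = representation_gaps(toy_counts, toy_population)
print(underrepresented(toy_gaps))
```

A check like this only flags gaps in headcount. It says nothing about label quality or how well the model performs for each group, so it is a starting point rather than evidence of equity.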

At the heart of this issue lies a governance vacuum. Who is responsible for equity in AI? Without a clear framework for accountability, equity remains a floating signifier, rhetorically powerful but practically toothless.

A.C.C.E.S.S. AI: Embedding Equity into the AI Lifecycle

Source: Health Affairs

The A.C.C.E.S.S. AI framework marks a step forward by embedding equity through community engagement and co-design. It calls on stakeholders to:

- Affirm their aims

- Clarify the scope

- Critically examine data sources

- Engage inclusively with impacted communities

This is not a task for data scientists alone. Clinicians, developers, and administrators all have a role in mitigating bias and building systems that serve everyone.

Source: CDC

The Cost of Inaction: From Bias to Data Colonialism

The stakes are real. Without robust governance, AI can become a tool of “data colonialism”, where community data is extracted without consent or benefit sharing. Indigenous and marginalised communities are particularly vulnerable.

Even well-intentioned systems risk becoming vehicles of dispossession unless enforceable rights around data governance are in place.

Model Development:

- Assemble a multiparty stakeholder team with diverse representations and backgrounds.
- Understand available AI technologies and training data for building and tuning models.
- Converge on working definitions of fairness and equality of use of each AI-based intervention.
- Perform AI capabilities and needs analysis, with explicit multistakeholder attention to implications for equity.
- Develop model and review measures of model performance on representative workloads.
- Document and address biases and gaps in data and performance with fairness tools.

Health System Integration:

- Seek input from local multistakeholder team on workflow vision leveraging integration of model into local workflow.
- Ensure transparency and disclosure about operating characteristics of end-to-end system, potential biases, recommended uses, caveats on risk to equity.
- Engage in limited testing of prototype with end-user representatives on realistic scenarios.
- Iteratively refine systems based on feedback from stakeholders.
- Revisit key question on equity in context of prototype end-to-end system.

Model Deployment:

- Engage in the phase of pragmatic trials with reporting to the multi-stakeholder team.
- Iteratively refine system, testing new variants with trials.
- Log usage for analytics.
- Provide clinician and patient feedback tools and opportunities to collect qualitative input on AI capabilities in practice.
- Monitor over time for drift of capabilities with changing frequency and nature of usage, demographics of population, short- and longer-term impact on population.
- Revisit key questions on equity in context of prototype end-to-end system.
- Periodically revisit key questions on equity, given logs and experiences.

Source: Johnson KB, Horn IB, Horvitz E. Pursuing Equity With Artificial Intelligence in Health Care. JAMA Health Forum. 2025 Jan 3;6(1):e245031. doi: 10.1001/jamahealthforum.2024.5031. PMID: 39888636.

Shifting from Concept to Architecture: The Role of Digital Testbeds

Bridging the gap between intent and implementation requires active infrastructure. Evaluation environments such as digital testbeds allow developers to test systems across subpopulations before deployment, so that performance gaps surface before a system reaches patients.
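To make the idea of subpopulation testing concrete, here is a minimal Python sketch of one check a testbed might run: computing sensitivity (the true-positive rate) separately for each subgroup and blocking release if the gap between the best- and worst-served groups is too wide. The function names, the toy records, and the 0.10 gap threshold are assumptions made for illustration. They do not come from any specific testbed or regulatory standard, and a real evaluation would examine several metrics with confidence intervals.

```python
from collections import defaultdict


def subgroup_sensitivity(records):
    """Compute sensitivity (true-positive rate) per subgroup.

    `records` is an iterable of (group, y_true, y_pred) with 0/1 labels.
    Groups with no positive cases are omitted, since sensitivity is
    undefined for them.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {group: true_pos[group] / n for group, n in positives.items()}


def passes_equity_gate(per_group, max_gap=0.10):
    """True if best and worst subgroup sensitivity differ by at most max_gap."""
    return max(per_group.values()) - min(per_group.values()) <= max_gap


# Hypothetical evaluation records: (group, true label, predicted label).
toy_records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1),
    ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 1), ("group_b", 1, 0),
    ("group_b", 1, 0), ("group_b", 0, 1),
]

toy_per_group = subgroup_sensitivity(toy_records)
print(toy_per_group)
print("release allowed:", passes_equity_gate(toy_per_group))
```

In this toy data the model detects three of four true cases in `group_a` but only two of four in `group_b`, so the gate fails. Treating the check as a release condition rather than a post-hoc report is one way to make equity an upstream requirement.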

The Path Forward: Accountability as a Standard

AI in healthcare is not inherently equitable; its outcomes depend entirely on the intentions, incentives, and infrastructures behind it. To centre equity without embedding accountability is to risk failure cloaked in progress.

We must shift the burden of proof:

No AI system should be deemed trustworthy until it demonstrates equitable performance across diverse populations and is governed by enforceable standards.

The next wave of health AI innovation must be governed by both brilliance and justice. Only then can we reclaim equitable AI as more than a slogan: as a standard.

References

Dankwa-Mullan, Irene. 2024. “Health Equity and Ethical Considerations in Using Artificial Intelligence in Public Health and Medicine.” Preventing Chronic Disease 21:240245. https://doi.org/10.5888/pcd21.240245.

Garba-Sani, Zainab, Christina Farinacci-Roberts, Anniedi Essien, and Joseph M. Yracheta. 2024. “A.C.C.E.S.S. AI: A New Framework for Advancing Health Equity in Health Care AI.” Health Affairs Forefront, April 25. https://doi.org/10.1377/forefront.20240424.369302.

Harvard Medical School. 2025. “AI Implications for Health Equity: Shaping the Future of Health Care Quality and Safety.” Trends in Medicine, April 7. https://postgraduateeducation.hms.harvard.edu/trends-medicine/ai-implications-health-equity-shaping-future-health-care-quality-safety.

Johnson, Kevin B., Ivor B. Horn, and Eric Horvitz. 2025. “Pursuing Equity with Artificial Intelligence in Health Care.” JAMA Health Forum 6 (1): e245031. https://doi.org/10.1001/jamahealthforum.2024.5031.

Manríquez Roa, Tania, Markus Christen, Andreas Reis, and Nikola Biller-Andorno. 2023. “The Pursuit of Health Equity in the Era of Artificial Intelligence.” Swiss Medical Weekly 153:40062. https://doi.org/10.57187/smw.2023.40062.

Mateen, Bilal. 2025. “How We Can Future-Proof AI in Health with a Focus on Equity.” World Economic Forum, April 2. https://www.weforum.org/stories/2025/04/future-proofing-ai-in-health-why-we-must-prioritise-investments-in-evidence-infrastructure-and-equity/.

Velastegui, Sophia. 2024. “Harnessing AI to Bridge Healthcare Disparities.” Forbes, April 9. https://www.forbes.com/sites/committeeof200/2024/04/09/harnessing-ai-to-bridge-health-care-disparities/.