The healthcare industry is experiencing an AI revolution. From diagnostics to workflow optimisation, AI promises transformative change. But beneath the hype, a sobering reality remains: more than 80% of AI projects fail, squandering billions in resources and eroding trust.

In a sector where the stakes are literally life and death, that failure rate demands scrutiny. Are we asking the right questions about AI’s role in healthcare? The evidence suggests we aren’t.

AI investment is booming, but are outcomes keeping up?

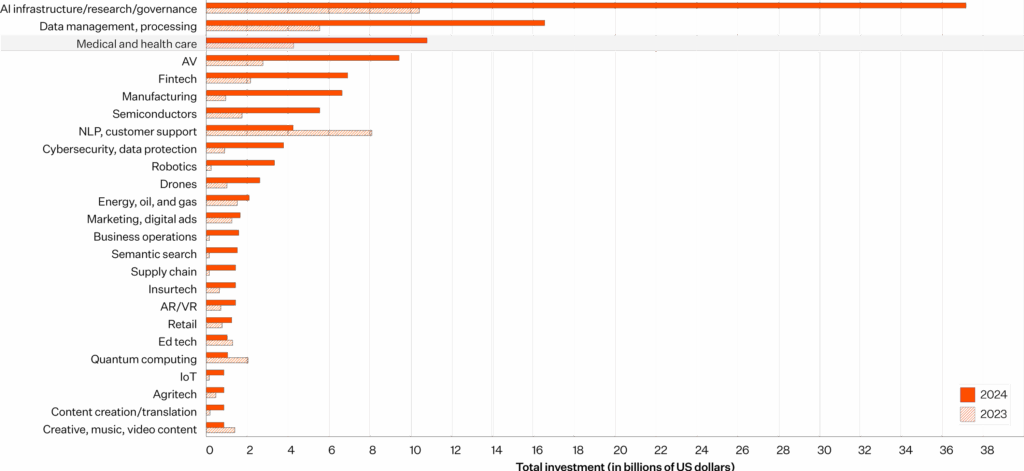

Global AI investment is surging. In 2024 alone, corporate investment in AI reached $252.3 billion, with healthcare among the biggest beneficiaries. Regulatory activity reflects this growth: the number of AI-enabled medical devices approved by the FDA each year rose from 6 in 2015 to 223 in 2023.

And yet, despite these impressive numbers, the reality on the ground is more complex. AI’s pace of advancement has outstripped traditional development and governance frameworks, exposing cracks in adoption strategies.

Source: 2025 AI Index Report

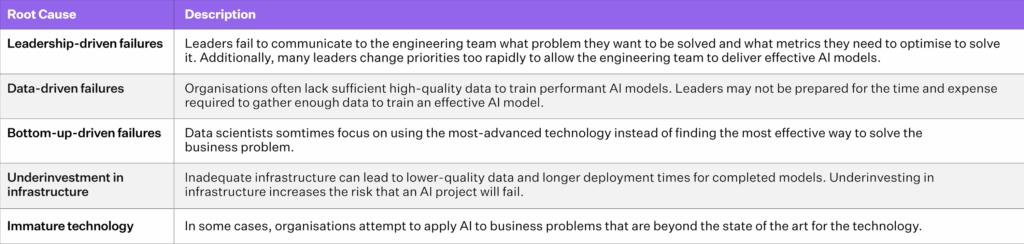

Why AI projects fail: A familiar pattern of missteps

A recurring issue across industries is the “build it, and they will come” mindset. Many organisations focus on technological novelty without considering clinical fit or long-term integration.

This tendency is especially dangerous in healthcare. Models often learn to reproduce patterns in historical data rather than to predict future outcomes reliably, a critical flaw when errors can affect patients’ lives. Too often, teams chase hype without a deep understanding of the clinical workflows and operational challenges they hope to improve.

Source: Ryseff, de Bruhl, and Newberry (2024)
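One practical guard against a model that merely echoes the past is to validate it on data from a later period than the data it was trained on, rather than on a random shuffle of all records. The sketch below shows only that splitting step, assuming each record carries an admission date; the `Record` type, the readmission label, and the cutoff date are hypothetical illustrations, not taken from the cited study.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Record:
    """Hypothetical patient record: an admission date plus an outcome label."""
    admitted: date
    readmitted: bool


def temporal_split(records, cutoff):
    """Train on records before `cutoff`; hold out records on or after it.

    Unlike a random split, this mirrors deployment: the model is judged
    on later patients it could not have seen during training.
    """
    train = [r for r in records if r.admitted < cutoff]
    test = [r for r in records if r.admitted >= cutoff]
    return train, test


if __name__ == "__main__":
    records = [
        Record(date(2022, 3, 1), False),
        Record(date(2022, 9, 15), True),
        Record(date(2023, 2, 10), False),
        Record(date(2023, 8, 20), True),
    ]
    train, test = temporal_split(records, cutoff=date(2023, 1, 1))
    print(len(train), len(test))  # 2 2
```

If performance on the later hold-out set is much worse than on a random split, the model has likely learned the past rather than something that generalises forward.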

Problem definition: The first and most common failure point

Poor problem definition is one of the top reasons AI projects falter. Healthcare organisations must ask: Is this truly a problem AI can solve?

AI excels at pattern recognition and prediction but struggles with causal reasoning and contextual understanding. Expecting AI to replace human clinical judgment is not only premature, it’s dangerous. Sophisticated models remain only as good as the data on which they are trained.

The hidden risk: Data quality and bias

Gartner estimates that 85% of AI models fail due to poor data quality.

This risk is magnified in healthcare, where data is fragmented across electronic health records (EHRs), labs, and imaging systems, often inconsistently structured and full of gaps.

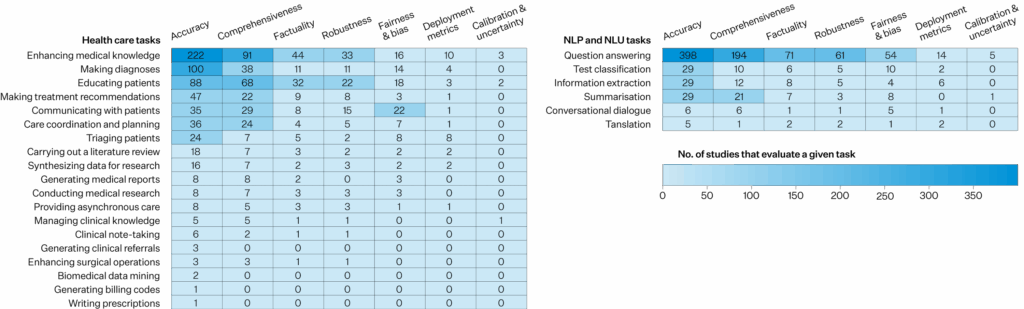

Source: JAMA (2025)

Without rigorous data curation, models may reinforce existing biases or generate clinically unsafe recommendations. Unfortunately, many organisations skip the groundwork of data readiness, diving into implementation before establishing trustworthy data pipelines. This is a foundational misstep, akin to building a skyscraper on sand.
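Part of that groundwork can start with something as simple as measuring how much data is missing, field by field and source by source. The following is a minimal sketch under assumed inputs: records are plain dictionaries tagged with a hypothetical `source` key (such as "ehr" or "lab"), and the field names and 20% threshold are illustrative choices, not a clinical standard.

```python
from collections import defaultdict


def missingness_by_source(records, fields):
    """Return {source: {field: fraction of that source's records missing the field}}.

    A value counts as missing if the key is absent, None, or an empty string.
    """
    counts = defaultdict(lambda: defaultdict(int))
    totals = defaultdict(int)
    for rec in records:
        src = rec.get("source", "unknown")
        totals[src] += 1
        for f in fields:
            if rec.get(f) in (None, ""):
                counts[src][f] += 1
    return {src: {f: counts[src][f] / totals[src] for f in fields} for src in totals}


def flag_gaps(report, threshold=0.2):
    """List (source, field, rate) wherever missingness exceeds the threshold."""
    return [
        (src, f, rate)
        for src, rates in report.items()
        for f, rate in rates.items()
        if rate > threshold
    ]


if __name__ == "__main__":
    records = [
        {"source": "ehr", "age": 64, "hba1c": None},
        {"source": "ehr", "age": 71, "hba1c": 7.2},
        {"source": "lab", "age": None, "hba1c": 6.8},
        {"source": "lab", "age": "", "hba1c": 8.1},
    ]
    report = missingness_by_source(records, ["age", "hba1c"])
    print(flag_gaps(report))  # [('ehr', 'hba1c', 0.5), ('lab', 'age', 1.0)]
```

Breaking the report down by source matters because, as noted above, healthcare data is fragmented: a field that looks complete overall may be almost entirely absent from one feeding system.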

Measuring AI’s value: It’s more than accuracy

Many healthcare AI projects tout improved diagnostic precision or efficiency gains but stop short of asking broader questions:

- Does this tool improve patient outcomes?

- Does it ease the clinician’s workload or add to it?

- Is it aligned with our principles of equity and quality of care?

The 2025 AI Index reports that while 78% of organisations used AI in 2024, most remain in early adoption phases and report modest financial returns.
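Even at the level of a single model, a headline accuracy figure can hide uneven performance, which is where the equity question above becomes measurable. As a minimal sketch, the code below computes overall accuracy alongside sensitivity (the true-positive rate) for each patient group; the group labels and toy data are hypothetical, and sensitivity is only one of several metrics a real evaluation would need.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def sensitivity_by_group(y_true, y_pred, groups):
    """True-positive rate per group: of the truly positive cases, how many were caught.

    Groups with no positive cases are reported as None.
    """
    tp = defaultdict(int)
    pos = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t:
            pos[g] += 1
            if p:
                tp[g] += 1
    # dict.fromkeys keeps groups in first-seen order without duplicates.
    return {g: (tp[g] / pos[g] if pos[g] else None) for g in dict.fromkeys(groups)}


if __name__ == "__main__":
    y_true = [1, 0, 0, 0, 1, 0, 0, 0, 1, 1]
    y_pred = [1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
    groups = ["A", "A", "A", "A", "A", "A", "B", "B", "B", "B"]
    print(accuracy(y_true, y_pred))  # 0.8
    print(sensitivity_by_group(y_true, y_pred, groups))  # {'A': 1.0, 'B': 0.0}
```

In this toy case, 80% overall accuracy conceals a model that misses every positive case in group B.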

Building an adaptive AI strategy in healthcare

To succeed, healthcare organisations must shift from rigid procurement cycles to agile, test-and-learn strategies. Budgeting for iteration, learning, and even failure should be part of the AI roadmap. Transparency is also key; organisations must be willing to report AI limitations, not just successes.

Most importantly, healthcare leaders, regulators, and AI developers must align on a new set of rigorous evaluation criteria. This means being honest about:

- The problem AI is solving

- The data quality and its biases

- The measurable impact on patient care

- The organisation’s capacity to adapt

Asking the right questions is the first step to success.

The promise of AI in healthcare is too important to squander on poor execution. To unlock its full potential, the industry must move beyond the hype and start asking tough, fundamental questions.

Getting AI right in healthcare isn’t about building more; it’s about building smarter, with clinical relevance, trust, and long-term impact at the centre.

References

- AI Index Steering Committee, Institute for Human-Centered AI, Stanford University. Artificial Intelligence Index Report 2025. Stanford, CA: Stanford University, 2025.

- Little, Jason. “Failed AI Projects Are a Feature, Not a Bug.” LinkedIn, April 26, 2025. https://www.linkedin.com/pulse/failed-ai-projects-feature-bug-jason-little-kbm8e/.

- Morales, Jowi. “Research Shows More than 80% of AI Projects Fail, Wasting Billions of Dollars in Capital and Resources: Report.” Tom’s Hardware, August 29, 2024. https://www.tomshardware.com/tech-industry/artificial-intelligence/research-shows-more-than-80-of-ai-projects-fail-wasting-billions-of-dollars-in-capital-and-resources.

- Bojinov, Iavor. “Keep Your AI Projects on Track.” Harvard Business Review, November–December 2023. https://hbr.org/2023/11/keep-your-ai-projects-on-track.

- Francis, Jameel. “Why 85% of Your AI Models May Fail.” Forbes, November 15, 2024. https://www.forbes.com/councils/forbestechcouncil/2024/11/15/why-85-of-your-ai-models-may-fail/.